Here is your stack of salt for reading or watching data content

You will need more than just a few grains

Hey, welcome back. Maybe you have already read some of my content, or maybe this is your first time. If you have read or watched something I have written or recorded, thank you for continuing to read it.

But here is a warning - I might have influenced you. So let's hope it was for a good cause if I did.

First of all, it is a great thing when people share:

- learnings

- thoughts

- rants

- tutorials

- reviews

about data setups, methods, frameworks, or projects.

Only when we are open to sharing our knowledge do we make the benefits of data accessible to as many people as possible.

This, at least, is my ideological agenda for why I share data content. Let's call it the rational mind and goal. We will get back to it.

But humans are complex; we rarely act based on one clear, rational goal. We have a collection of agendas when we do things.

And so does everyone who creates data content. Myself included.

In the following, I will give you different grains of salt that you can apply when you read a piece of content to add perspective. With them, you can put the content into its proper context. That doesn't remove any value from the content; if anything, it adds value.

To make it easier to read, I will use myself as an example and share with you the different agendas that influence my writing and recording.

Agenda 1: what do I sell?

If you want to add just one context layer to a piece of content - that is the one.

Check the author's job and company.

Here is mine:

I am the founder of Deepskydata - now the tricky part because it is in flux at the moment:

- Deepskydata (from the website) is a platform that offers free data videos

- Deepskydata (from network knowledge) is offering consulting and freelance services around tracking, analytics, and data engineering

The second one is easier - I produce content to find future clients and to build trust with future and existing ones. You will rarely find any call to action for my consulting services in my content, but I can tell that the content helps me a lot when acquiring a new project (leads who know my content are more likely to convert).

The grain of salt is that I write this kind of content to position myself in a particular way for future projects. And to some degree that is true - I usually write about things that I already do in projects or that I want to do more of in the future.

So any founder creating content is doing it, to some degree, so that it positively impacts their product or service.

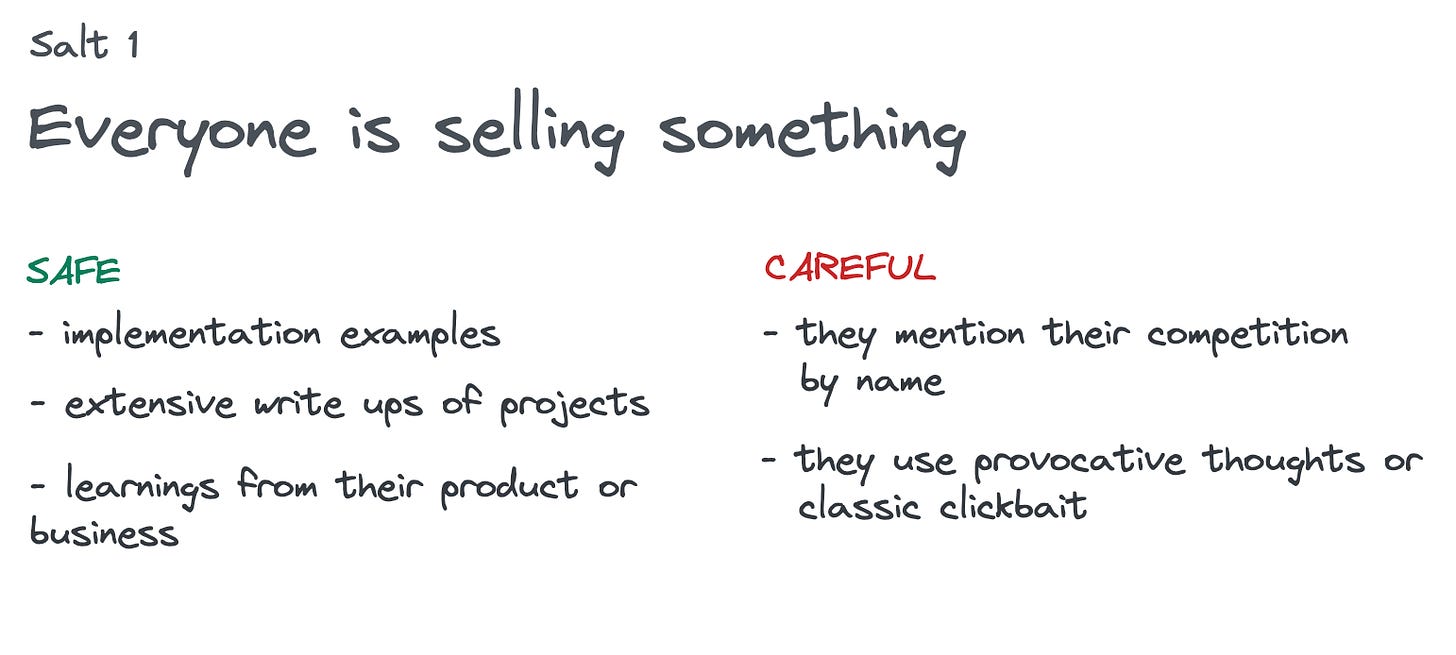

And this is fine if the content is about:

- implementation examples

- extensive description of projects (extensive - not just showing the sunny sides)

- learnings from their product or business

Be careful when:

- they mention their competition by name and put their product above them

- they use provocative thoughts or classic clickbait - this is often aimed at setting their product and service apart from the "usual" way - it can make sense, but read it with plenty of grains

Agenda 2: how deep is the knowledge?

This is much trickier to find out. Sometimes people ask me how I manage to test and write about so many different products.

The answer is simple: my depth of knowledge varies significantly between them.

There are ~5 products that I know in depth. The reason is simple: I have worked with them for years and on multiple projects. My knowledge is based on experience (and the extra effort to test out edge behaviors and ideas).

Then there are plenty of products that share enough similarities with the five I know deeply.

I have a deep understanding of Amplitude, which also gives me a very good knowledge of Mixpanel, Heap, Posthog, and new kids on the block like Kubit and Netspring.

Why? Because the underlying principles of product analytics are similar across these solutions. Details and focus topics are different, but I can start from level 30 to learn about these.

When I write content about a specific product or approach, I only do that when I have some experience.

For me, some experience means, at a minimum, that I have already done an implementation end to end. Not always for a production environment - often, I use sandbox environments.

When I write about thoughts or ideas, they are based on plenty of experience, but the connection is not always visible. In the future, I will try to be more transparent about which foundation has led to the thoughts and ideas, to make it clearer for the reader.

Good signs of knowledge:

- code samples (beyond simple hello worlds) - someone shows how they implement things

- understandable concept drawings - creating a concept drawing requires quite some effort to boil a topic down to a simple visualization

- a clear description of a setup and steps/scopes of work

Pick your grains when:

- people skip complex steps by covering them in 3-4 words - like "then you model the data" - spotting these skipped steps is harder if the topic is new to you. So I use this method - when you get ??? floating around your head while reading a simple sentence - the author has likely underplayed the complexity.

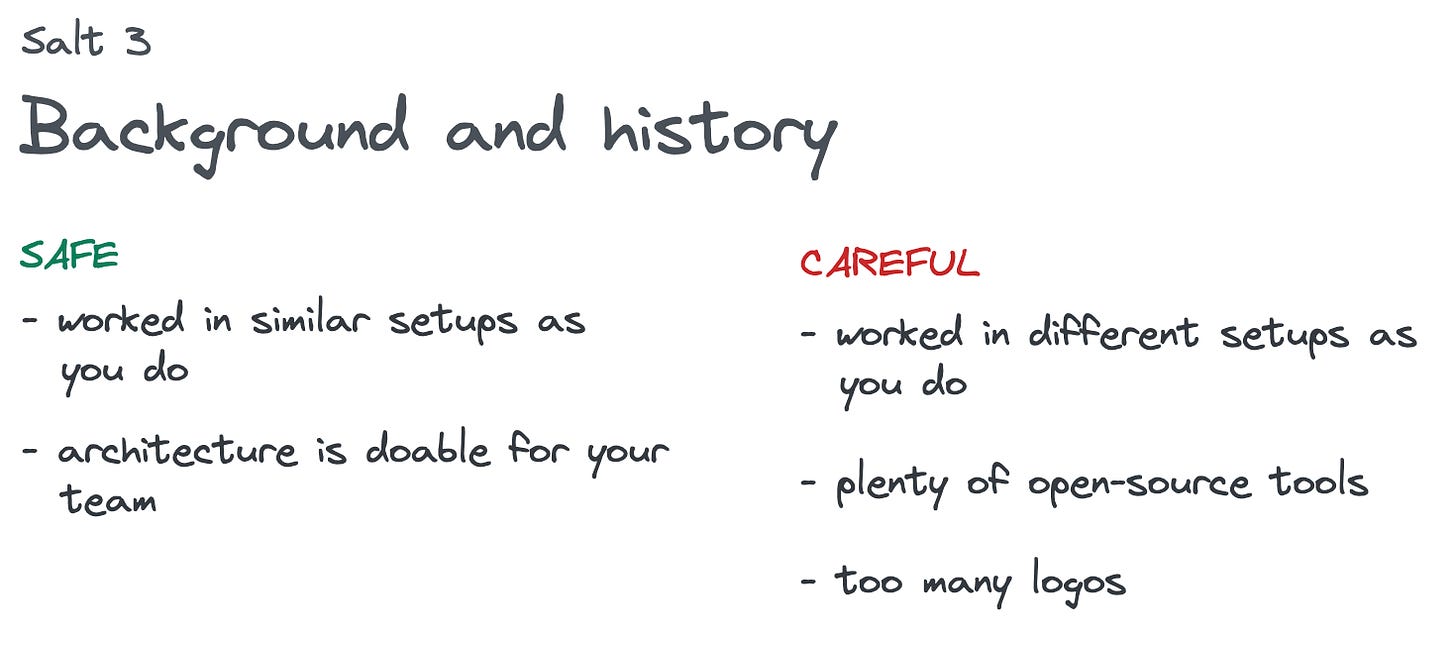

Agenda 3: what are their background and history?

This can also be hard to figure out.

A person's background shapes a lot of which products and approaches they write about, because most of the time, we write based on our experiences.

Take me as an example:

I have mostly worked for smaller startups, so my tracking, analytics, and data engineering background is based on smaller and leaner setups.

I have never worked for an enterprise with 1000 different data sources that needed integration, and I have never worked in an actual streaming environment.

So if you read my content and wonder, "Does this work in a big enterprise organization?", you are right to ask - I can't tell you because I don't know. You have to make the transfer yourself.

And this also goes in the other direction.

Plenty of data content is influenced by companies and people who worked in FAANG or similar environments. I don't know why these groups have such a high content output, but they do.

The problem is that their approaches worked well for them in their setups (and they are remarkable in terms of scalability and streaming), but they are over-engineered for the rest of us.

Things to watch out for:

- high focus on open source solutions (there needs to be someone to keep them up and running)

- complex architecture diagrams

- anything about streaming (Kafka, Flink) or heavy data transformations (Spark)

This content is still interesting to read, but more as a guide for a future state where you have the same problems as high-scale companies, and not as a blueprint for your next year's planning.

With these three types of grains of salt applied, you will get a lot more from the content you read or watch about data.

To finish:

What is my agenda when writing this?

As noted above - my idealistic view is:

Data content can help:

- provide you with real solutions to your implementations - giving you a shortcut

- provide you with plenty of context, so you can adapt solutions to your setup and learn the mechanics

- broaden your views with new approaches and solutions

So I get easily triggered by content:

- that is mainly written for awareness (like XY is dead/broken) but is not providing any clear value besides useless rants

- that is practically relevant for a small audience but six sizes too big for everyone else.

So I tried to write a small but practical guide for consuming data content. I hope this helps.

Let me know if you have any different approaches to data content.