More than 30 unique tracking events will cause you problems

The easiest way to increase data adoption and productivity and to reduce quality problems

I really have a problem here: people don’t take me seriously when I warn about too many unique events.

OK, maybe I was a bit too clickbaity in the past. Here is my video: “You only need eight events to track your business.”

But to be honest, this is still true. For core business performance, eight events are usually sufficient.

Let’s look into some definitions

Unique events

But what actually are unique events?

An event usually has an event name, like “account created” (or a different notation if you use a different system).

When I talk about unique events, I mean unique event names.

More than 30 unique events

I haven’t done any quantitative research here. It’s more of a threshold based on experience. Maybe 40-50 events will still work for you. But in all the projects I have worked on, the problems I describe below started to appear beyond 30 events.

The problems with many events

The analyst productivity dilemma

If an event is not analyzed, it is useless (unless it is used in automation).

The typical analysis scenario is a classic exploration scenario. You start with an idea or a curiosity. Based on that, you open up your analytical tool of choice (a product analytics tool, a notebook). Now comes the first critical question: On which event data can I base my exploration?

Let’s take a real-life example. You are a SaaS company with a free plan. Your conversion from the free to the paid plan is still quite poor, and you want to determine whether there are conditions under which free users are more likely to convert to a subscription.

So you build different funnels: the first step is that a specific feature has been used, the second that the user then converted to a paid subscription.

You start with a feature list from your product, sorted by what you think are the essential features. Now you want to build out the different funnels quickly.

But this requires that you find the right events for “a feature has been used” and “a subscription has been created.”
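Once the right events are known, the funnel itself is the easy part. Here is a minimal sketch, assuming a flat list of events that each carry a user ID, an event name and a timestamp. The `Event` type, the `feature_to_paid_funnel` helper and the event names (`task created`, `subscription created`) are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    user_id: str
    name: str
    timestamp: int  # e.g. Unix seconds


def feature_to_paid_funnel(events, feature_event, paid_event):
    """Return (users who used the feature, users who converted afterwards, rate)."""
    first_feature = {}  # user_id -> timestamp of first feature usage
    converted = set()   # users with a paid_event at or after their first feature usage
    for e in sorted(events, key=lambda e: e.timestamp):
        if e.name == feature_event and e.user_id not in first_feature:
            first_feature[e.user_id] = e.timestamp
        elif e.name == paid_event and e.user_id in first_feature:
            converted.add(e.user_id)
    entered = len(first_feature)
    rate = len(converted) / entered if entered else 0.0
    return entered, len(converted), rate


# Hypothetical events: u3 paid before using the feature, so u3 does not count as converted.
events = [
    Event("u1", "task created", 1),
    Event("u1", "subscription created", 5),
    Event("u2", "task created", 2),
    Event("u3", "subscription created", 1),
    Event("u3", "task created", 3),
]
entered, converted, rate = feature_to_paid_funnel(events, "task created", "subscription created")
```

The code is trivial; the expensive part is choosing the two event-name arguments, and that is where a large event catalog costs time.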

If you have a focused setup with around 30 events, finding potential candidates for the analysis should not take long. At least the chance of finding multiple candidates is low.

Finding the right events in a setup with 150 unique events will take plenty of time, because you often discover multiple candidates for the right event. These candidates sometimes have very similar names (subscription created, subscription submitted, create subscription, subscription started). So you first analyze all candidates for volumes and dependencies to rule out the unlikely ones, and hopefully you end up with one promising candidate. If not, your last resort is a debug session or a conversation with a developer to figure out where the different events are triggered.
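The first triage step, listing candidates by name and sorting them by volume, can be sketched as follows. The `find_candidates` helper and the volumes are made up for illustration. It only narrows the list and cannot tell you where an event is actually triggered:

```python
def find_candidates(keyword, event_counts):
    """List event names containing keyword, highest volume first."""
    hits = [
        (name, count)
        for name, count in event_counts.items()
        if keyword.lower() in name.lower()
    ]
    # Sort by descending volume, then alphabetically for a stable order.
    return sorted(hits, key=lambda item: (-item[1], item[0]))


# Made-up daily volumes for a few event names from a large setup.
counts = {
    "subscription created": 120,
    "subscription submitted": 118,
    "create subscription": 40,
    "subscription started": 5,
    "task created": 9000,
}
candidates = find_candidates("subscription", counts)
```

With near-identical volumes (120 vs. 118), the numbers alone rarely settle the question, which is why the debug session often becomes necessary.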

I call this process the analyst productivity dilemma. You are highly motivated to find clues to improve the product but need to spend days, sometimes even weeks, just to get started. It’s like using a search engine whose first answer is that an index for your question still needs to be built and you should come back later.

The documentation excuse

The most common answer to the problem above is: you need better documentation.

And it’s true: really good documentation would solve this problem. In an ideal world, you identify candidates for the right event and then check the documentation for how they are defined and triggered. The whole process takes a few minutes, and you are ready to start.

But let’s be honest: if our answer is “better documentation,” we are most likely doomed.

Yes, it is possible to have really good documentation. But this requires a lot of resources, strict processes, and documentation monitoring. And yes, there are tools like Avo that help significantly with it. But when a developer does not clearly state how they implemented an event, the question marks still don’t disappear.

And now, think about maintaining this documentation for more than 30 events. This already requires a team.

The monitoring problem

In a serious setup, you would monitor your events for two aspects:

- syntactical correctness

- volume changes

The first tells you if a new release has suddenly changed an event name slightly. The second tells you if there is a potential triggering issue when the volume drops or increases significantly.
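Here is a minimal sketch of the syntactical check. It assumes a tracking plan kept as a set of event names and a hypothetical naming convention of at least two lowercase words separated by single spaces (like “account created”). Tools like Avo do this far more thoroughly, and this is not their API:

```python
import re

# Hypothetical convention: two or more lowercase words separated by single spaces.
NAME_PATTERN = re.compile(r"[a-z]+( [a-z]+)+")


def check_event_names(observed, tracking_plan):
    """Return {event_name: [problems]} for observed names that fail a check."""
    problems = {}
    for name in observed:
        issues = []
        if not NAME_PATTERN.fullmatch(name):
            issues.append("violates naming convention")
        if name not in tracking_plan:
            issues.append("not in tracking plan")
        if issues:
            problems[name] = issues
    return problems


plan = {"account created", "subscription created"}
# Names seen in production after a release (hypothetical).
observed = ["account created", "Account Created", "subscription_created", "subscription started"]
report = check_event_names(observed, plan)
```

A release that silently renames “subscription created” to “subscription_created” shows up here immediately, even though the volume of the old name simply drops to zero.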

All of this monitoring is doable: syntactical checks with a tool like Avo, volume checks with any data observability tool (where you potentially have to write a test query for each event; have fun doing that for 100 or more events).
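Here is a minimal sketch of such a per-event volume test, assuming you already have daily counts per event name. Comparing the latest day against the mean of the previous days and the 50% threshold are arbitrary illustrative choices, not what any particular observability tool does:

```python
from statistics import mean


def volume_alerts(daily_counts, threshold=0.5):
    """Flag events whose latest daily count deviates from the mean of the
    previous days by more than `threshold` (as a relative change)."""
    alerts = {}
    for name, counts in daily_counts.items():
        if len(counts) < 2:
            continue  # not enough history to compare against
        baseline = mean(counts[:-1])
        latest = counts[-1]
        if baseline == 0:
            if latest > 0:
                alerts[name] = float("inf")  # event appeared out of nowhere
            continue
        change = (latest - baseline) / baseline
        if abs(change) > threshold:
            alerts[name] = change
    return alerts


# Hypothetical daily counts; the last entry is "today".
daily = {
    "account created": [100, 100, 100, 40],
    "task created": [1000, 1000, 1100, 1050],
    "subscription created": [10, 10, 10, 30],
}
alerts = volume_alerts(daily)
```

The loop runs once per event, so every additional event is one more series that can produce an alert, real or not.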

But when you start to receive alerts for more than 100 events, you need to figure out when and how to react to them. Alerting on volume always creates noise: more events, more noise.
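To put “more events, more noise” into numbers, assume (purely for illustration) that each event’s volume check independently raises a false alert on a given day with probability $p$. With $N$ monitored events:

$$
\mathbb{E}[\text{false alerts per day}] = N p, \qquad P(\text{at least one false alert per day}) = 1 - (1 - p)^{N}
$$

With $p = 0.01$, 30 events give 0.3 expected false alerts per day (at least one on about 26% of days), while 150 events give 1.5 per day (at least one on about 78% of days).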

Why do we create many events?

The legacy problem

I would say the main reason for so many events is “generations” of ownership of the events. It’s easy to define and implement an event. But time moves on, and if the documentation is not precise enough, there comes a day when someone else needs a similar event. She finds the existing one but is unsure if it covers what she is looking for. Even the devs can’t give a clear answer. So, to be safe, they implement a new event (for the same case) at a slightly different place in the code base. And this process can repeat itself multiple times.

I worked on setups where we had over seven instances of the same event just because of this.

The ownership problem

In a small startup this should be unlikely, but I have seen it even there. Multiple teams require event data, and their use cases and preferences usually differ. When no single team is responsible for event tracking, you will almost certainly end up in chaos.

But even with a team that owns the event tracking, aligning all the requirements is a huge effort, and you always risk a severe bottleneck situation.

How to avoid too many events

By design

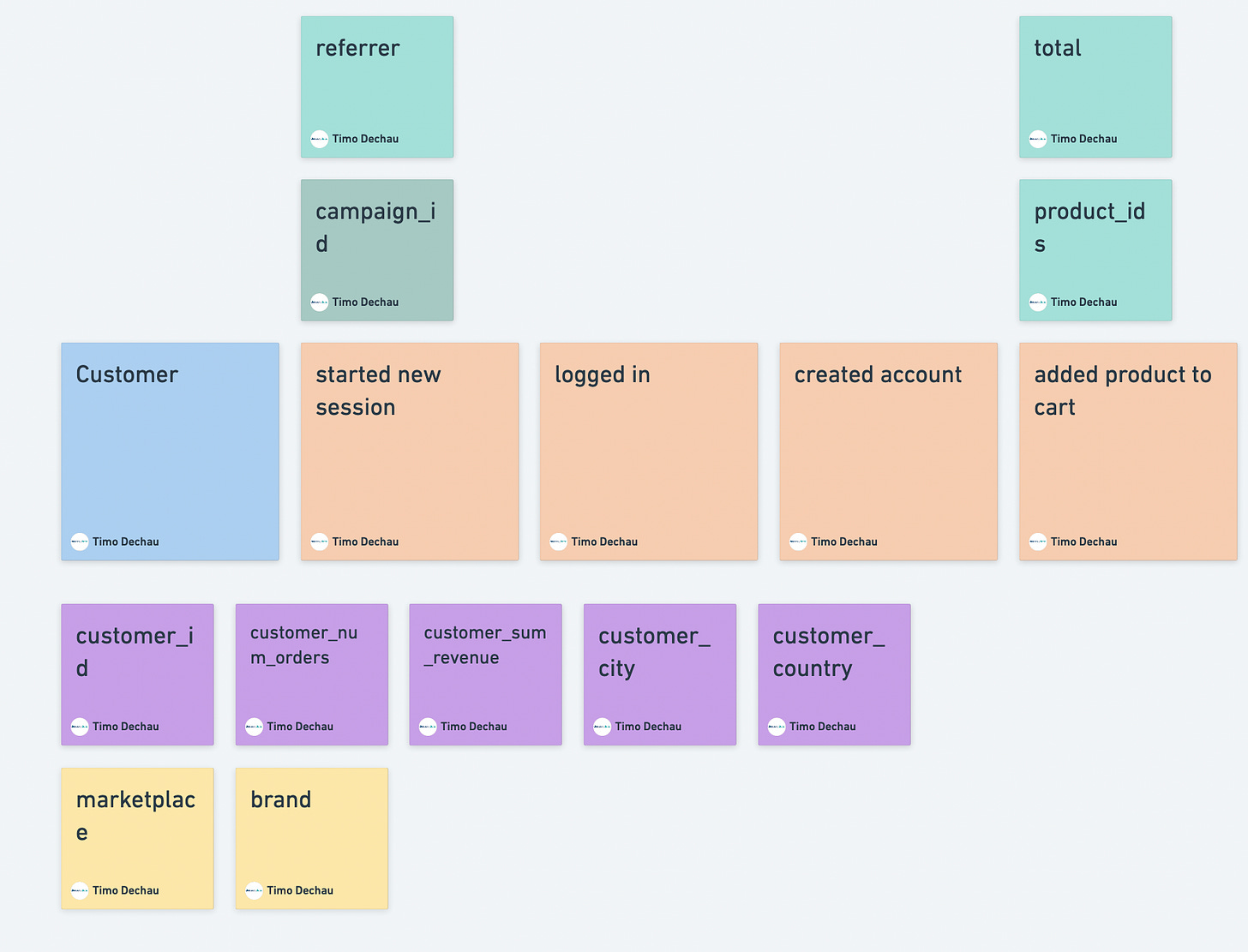

A good tracking event design is the best way to prevent too many events. My approach is to develop a source-agnostic event concept that is built around the customer and product/service journey and uses a sufficiently high aggregation level.

That means breaking down your product/service journey into the entities that make up the product.

So for a task management tool, you might have these entities: customer, user, task, list - that’s it.

Work extensively with properties to keep the number of unique entities low.

For each entity, you then design the activities that matter. These usually cover the lifetime of an entity: customer lifetime activities, task lifetime activities, and so on.

With this approach, you will typically end up with 20-30 events.
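Here is a sketch of the entity/activity idea for the task management example. The four entities come from the text. The activities, the `TRACKING_PLAN` dictionary and the helper functions are hypothetical illustrations, not a prescribed schema:

```python
# Hypothetical tracking plan: entities from the example, activities are assumptions.
TRACKING_PLAN = {
    "customer": ["created", "subscription started", "subscription changed", "subscription cancelled"],
    "user": ["invited", "signed up", "logged in", "removed"],
    "task": ["created", "updated", "completed", "deleted"],
    "list": ["created", "shared", "archived", "deleted"],
}


def event_names(plan):
    """Derive the unique event names as '<entity> <activity>'."""
    return [f"{entity} {activity}" for entity, activities in plan.items() for activity in activities]


def make_event(entity, activity, **properties):
    """Build an event payload, rejecting anything that is not in the plan."""
    if activity not in TRACKING_PLAN.get(entity, []):
        raise ValueError(f"not in tracking plan: {entity} {activity}")
    return {"event": f"{entity} {activity}", "properties": properties}


# Details go into properties instead of new event names:
# one "task updated" event rather than "task renamed", "task due date changed", ...
payload = make_event("task", "updated", field="due date")
```

This illustrative plan yields 16 unique events, which leaves room for a few more activities before reaching 30.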

But what about granular events that are irrelevant to business questions but important for evaluating specific feature usage, e.g., how a list got sorted? Treat these as catch-all events like interaction clicked (element-type: sort, element-value: due date desc, element-text: sort by,…).

When you provide enough context in the properties, these events work perfectly for quick feature evaluations. When they become more important, upgrade them to proper product events.
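Here is a sketch of the catch-all pattern, assuming events are plain dictionaries and using the property names from the example above. The helper names are hypothetical. Filtering on `element-type` is what isolates one feature from all the other interactions:

```python
from collections import Counter


def interaction_clicked(element_type, element_value, element_text):
    """Build a generic catch-all event; the context lives in the properties."""
    return {
        "event": "interaction clicked",
        "properties": {
            "element-type": element_type,
            "element-value": element_value,
            "element-text": element_text,
        },
    }


def feature_usage(events, element_type):
    """Count element-value occurrences for one slice of the catch-all event."""
    return Counter(
        e["properties"]["element-value"]
        for e in events
        if e["event"] == "interaction clicked"
        and e["properties"].get("element-type") == element_type
    )


events = [
    interaction_clicked("sort", "due date desc", "sort by"),
    interaction_clicked("sort", "due date desc", "sort by"),
    interaction_clicked("sort", "title asc", "sort by"),
    interaction_clicked("filter", "assignee", "filter by"),
]
sort_usage = feature_usage(events, "sort")
```

If sorting turns out to matter for business questions, it can later be upgraded to a dedicated product event without changing the catch-all event for everything else.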

By ownership

We touched on this before: one team (or one person, when you start) needs to own the tracking event schema.

The major challenge for this team is to avoid becoming a bottleneck. A good design helps with that.

So when a new tracking event request comes in, you check:

- is this event already covered by the core customer or product events, with maybe just a property missing to serve the exact use case? Extending and growing properties is much less of a problem

- if not, introduce the event as a catch-all event and show the team how to work with it (how to filter it out from the other catch-all events)

Besides new events, you are also responsible for event maintenance. Regularly check how the different events and properties are used in reports and analyses. Can they be improved or even reduced? If yes, do it. One event fewer is a big productivity win (see above).
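Part of that check can be automated. Here is a rough sketch, assuming your report and analysis queries are available as text and reference event names as quoted string literals. That assumption will not hold for every BI or product analytics tool. `unused_events` is a hypothetical helper:

```python
def unused_events(tracking_plan, report_queries):
    """Return plan events that no report query references as a quoted literal."""
    used = {
        name
        for name in tracking_plan
        for query in report_queries
        if f"'{name}'" in query  # e.g. WHERE event = 'task created'
    }
    return sorted(set(tracking_plan) - used)


plan = ["task created", "task completed", "list archived", "user invited"]
# Hypothetical queries exported from reports and notebooks.
queries = [
    "SELECT count(*) FROM events WHERE event = 'task created'",
    "SELECT user_id FROM events WHERE event IN ('task completed', 'user invited')",
]
candidates_to_remove = unused_events(plan, queries)
```

An event that shows up here is only a candidate for removal; it may still feed an automation or a dashboard that was not exported.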

Embrace the lean event approach

I have seen many problems with tracking data quality and adoption that had their root cause in the event design.

Based on my experience, you can drastically increase data productivity if you invest time and effort into data creation. Limiting the unique event count is a really good measure for this.

What are your experiences with a high number of unique events? Are you skeptical about reducing them? If so, let me know in the comments.

Lean event approach FTW!