Quo vadis, data open source?

A case study and review based on the examples of Snowplow, dbt, Rudderstack, and Iceberg

I have two close friends, and we initially bonded over a book series we had all read: Daemon and Freedom (TM) by Daniel Suarez:

https://www.amazon.com/dp/B074CDHK46

Set in the near future, the story follows a Daemon program that a genius game developer sets loose after his death. Step by step, it dismantles the capitalist world and its power structures, and instead promotes autonomous communities and knowledge sharing across them. Interestingly, the books are pretty violent, but then again, I guess there is no peaceful revolution in the catalog.

To quote from the Amazon book detail page:

Daniel Suarez's New York Times bestselling debut high-tech thriller is “so frightening even the government has taken note” (Entertainment Weekly).

But the message resonated a lot with us: an algorithm that gives everyone a level playing field and promotes (and enforces) collaboration over harmful competition.

If only it were that simple.

The current state of open source in the data field

I would like to look at the current state not in the abstract but through four examples of different open-source approaches in the data space. These examples help us learn about the characteristics of each approach, what worked well, and what is complicated.

Naturally, this is not an "open source is dead" (or whatever) post. It is an examination by observation from the outside, and the outside is important here. I have no exclusive insider knowledge, since I never worked on any of these projects. My observations are based on visible interactions (posts, videos, announcements, pricing) and my own experience working with the tools.

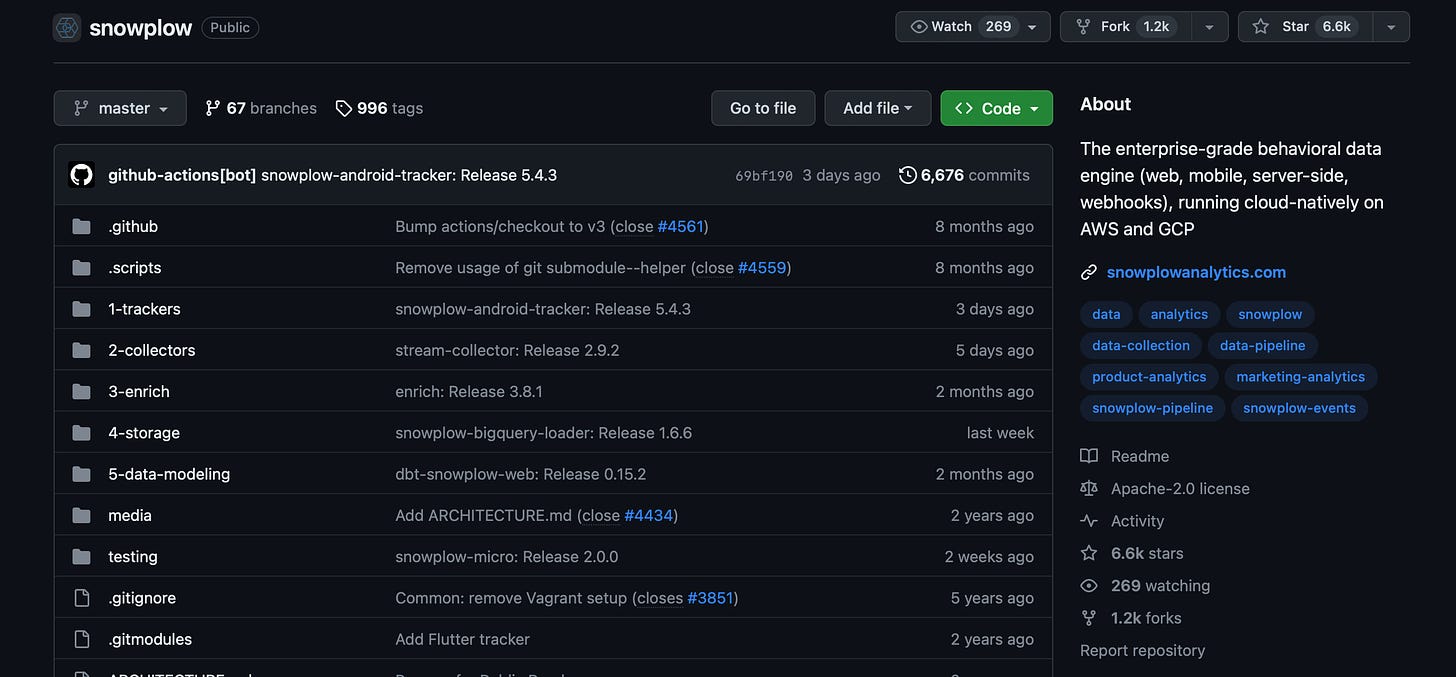

1 - Snowplow

I don't know which open-source data product still in heavy use is the oldest. But the one I know and have worked with (almost from the start) is Snowplow. With Snowplow, you can build an event data pipeline that receives events from practically everywhere, handles schema validation (hello, data contracts), and loads the data into Redshift, Snowflake, Databricks, or BigQuery.

When I discovered Snowplow for the first time, it was like finding a hidden, beautiful island: hard to get to, and also hard to navigate once you had landed. But once you manage it, you have an excellent, reliable, transparent, and scalable event data pipeline. You also get the great feeling of having set everything up yourself, and you feel a little like the god of this island.

This is the power of open source, and Snowplow is still one of the outstanding examples for me.

What characterizes Snowplow:

For quite a long time, the open-source offering stood on its own.

They introduced a clear enterprise product with the BDP, a managed Snowplow instance in your own cloud account. So the scenarios for upgrading from open source to managed are quite clear:

We don't have the resources to manage the pipeline.

The event data from the pipeline becomes so important for our business that we can't afford hiccups.

To this day, the open-source product is still close to the BDP in terms of features. It is well supported and maintained by the Snowplow team. And when you talk to the engineers, you can hear their passion for the open-source product.

Setting up and maintaining the open-source version is not easy. It requires either grit and passion or an ops team that does this for a living. This means the OS version is definitely not a free tier where people can casually test things out.

The requirements that take you from the open-source solution to the BDP are quite specific and narrow, which makes growing revenue difficult. This is one reason why Snowplow is experimenting with additional offers, such as the cloud offering (a more affordable BDP) and a digital analytics package.

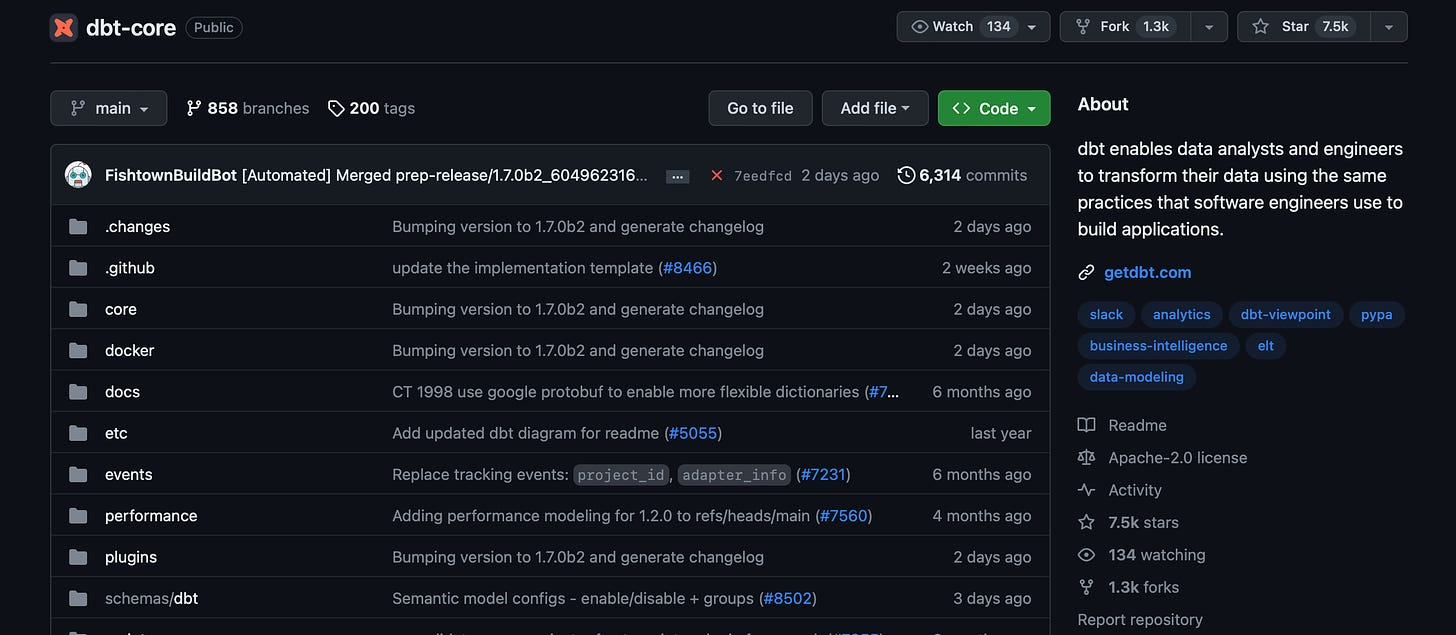

2 - dbt

Just 18 months ago, dbt might have been the poster child for successful open-source and community enablement. Create buzz with an open-source solution that solves a real problem. Then create a group feeling around the pain people go through to get from raw data to insights, and turn it into a community. Then create a new job role and level these people up as engineers. This played out exceptionally well.

Until it was time for a go-to-market strategy.

Here comes the caveat:

We can't simply call everything that happened before (OS, community, conference, evangelism) a go-to-market strategy, because there was no market involved (if we define a market as a place where buyers and sellers exchange goods and services for money). It was more of a go-to-attention, go-to-adoption strategy, and that one worked out like a poster child.

But unless a big company or foundation funds you, you must find a market.

So far, this seems pretty hard. dbt tried the managed upgrade approach.

They offer a service on top of the OS solution that provides additional benefits to data teams. One essential problem is that these benefits are too marginal. I still see an opportunity here, since data teams have many problems working with SQL models. There is plenty of room for a dbt Cloud that makes people open up their budgets.

The tricky thing about "when you pay us, you get a much better experience" is that it is extremely hard to find the right angle for it.

In the web frontend space, GatsbyJS failed with a managed approach. They leaned toward lock-in and put essential features behind a paywall. Vercel, with NextJS, succeeded instead. They played it open (you can deploy NextJS anywhere, quite similar to dbt) but focused heavily on building a best-in-class developer experience.

In dbt's case, running dbt build jobs for you improves only about 5% of the developer experience.

I don't understand why dbt didn't double down on the editor and make it the most groundbreaking developer experience. This would have earned them more fame and more paying users. You can see the consequences now in the emergence of dedicated dbt editors like Paradime and Deep Channel.

What characterizes dbt:

A masterclass in kicking off an open-source project. Yes, timing played a role, but they did a lot of things really well. The early office hours with Claire Carroll were extremely good and helped create the "we are in this together" vibe the community had initially. So there are good lessons for others launching an OS project.

A managed upgrade that did too little to improve the developer/analyst experience and therefore created little pull.

A lack of focus (at least from the outside), with sidetracks like adding a metrics layer and then backing away from it. Sometimes I thought the company's real goal was to create the biggest and most relevant data conference (which it definitely was). Imagine what could have been achieved if they had put all their people and money into building the best possible editor.

Again, from the outside, there is no clear go-to-market strategy. What would an enterprise dbt look like? How do you make it irresistible for CIOs, CDOs, and CTOs to buy it and roll it out across the organization?

The dbt approach became the blueprint for most OS-based data tools in the modern data stack, for better or worse. This is one reason we currently look at OS skeptically: plenty of companies are already running, or will run, into the same problems as dbt.

3 - Rudderstack

Rudderstack is a really interesting one, and I admit it's a bit of an emotional journey for me. Rudderstack launched as an open-source alternative to Segment (much like Airbyte launched as an OS alternative to Fivetran; the similarities stop there, since Rudderstack is extremely good at execution).

The open-source version was extremely close to Segment's solution (theoretically, you could simply switch the endpoint URL from Segment to Rudderstack and keep your whole implementation). It was designed and launched with one clear goal: make it as easy as possible to replace Segment. This is, by the way, a GTM strategy.

But it felt a bit strange to me. Initially, I saw OS as taking an existing proprietary solution and creating something better for free (free cake on Idealism Island). Jitsu, launched at roughly the same time, took that route. But in the end, Rudderstack played the early Linux game (if you disagree, please let me know; I am no expert here). Linux was, first and foremost, about making Unix systems affordable for the masses.

Rudderstack also launched a managed service pretty quickly. The first promise: we take care of the hosting for you. This opened it up to many more use cases and audiences.

Then the open-source and managed versions quickly diverged in features. It was obvious that this gap would widen over time.

This was my second struggle with Rudderstack: it felt a bit like a betrayal of my image of OS. Having worked with Snowplow before, I was in the camp of "you provide an OS version with almost the same feature set, just harder to set up."

But I came to terms with it. Their open-source offering is the core of the service, for teams that are happy with the core and want more control over the setup: a clear, focused solution for a small audience. Their communication also changed. Today, you will have a hard time finding open source mentioned on their website.

And they are still committed to their open-source core; the repo is well maintained.

What characterizes Rudderstack:

Looking back, OS was a clear GTM strategy: we are here to replace an existing vendor with a cheaper, more transparent solution.

On top of it, build out your real (money-making) service. Then use this money to invest in sales and growth teams and initiatives, and start to pull ahead with new features.

But keep open source as a core offer and give back to the community.

If this continues to play out like this, it is not far from how the big players like Facebook or Google approach OS (on a much smaller scale): we make money with a managed service (or ads, in Facebook's case), and we offer OS to give back, support hiring, and build goodwill.

This is not the idealized version of open source, but it is still open source.

4 - Apache Iceberg

Now it gets interesting. I want to start with some history and context, partly because I did not know it myself and had to read up on it.

First of all, what is Apache Iceberg? It is not my area of expertise, so I will quote the definition from Iceberg's website verbatim:

"Iceberg is a high-performance format for huge analytic tables. Iceberg brings the reliability and simplicity of SQL tables to big data, while making it possible for engines like Spark, Trino, Flink, Presto, Hive and Impala to safely work with the same tables, at the same time."

As I understand it, Iceberg is one of the table formats driving the lakehouse approach. You can use it, for example, on top of AWS S3.

Here you can learn more: https://iceberg.apache.org

A bit about the history (from Wikipedia):

"Iceberg started at Netflix by Ryan Blue and Dan Weeks. [...] Iceberg development started in 2017. The project was open-sourced and donated to the Apache Software Foundation in November 2018. In May 2020, the Iceberg project graduated to become a top level Apache project."

Two things already stand out:

When used, Iceberg is critical infrastructure on the one hand and a standard on the other. Both Snowflake and Databricks announced initial support for the Iceberg format this year. That is only possible with an open standard (Snowflake might not do the same for the Delta Lake format, which Databricks developed).

Like many data products, this one originated in the work of data teams at one of the big data-heavy services (here, Netflix). But instead of being spun out with a business built around it, the project quite quickly moved under the umbrella of the Apache Software Foundation. This does not rule out future commercial offerings: Airflow and Superset are also Apache projects, with managed versions from Astronomer and Preset, respectively.

All this leads to the following characterization:

Iceberg is not a tool in the first place; it is a piece of infrastructure and a standard. Therefore, openness is an essential trait.

It can be maintained with the help of the Apache Software Foundation.

Future commercial models are not ruled out, but it will be interesting to see if and how they emerge.

The impact and enablement are massive. Formats like Iceberg and Delta Lake already influence data infrastructure significantly and will continue to do so, which opens up plenty of ways for us to put them to good use. But they will not be the sole foundation of a multi-billion-dollar company, which is absolutely fine.

So now, quo vadis, data open source?

What follows is very opinionated, and I would love to read your comments on why you think I am wrong.

The dbt case has shown how hard it is to combine their open-source and community strategy with a real GTM strategy. But it offers plenty of lessons for other data companies.

On the OS and community side: how to launch, promote, and develop an open-source product.

On the business side: what does your business strategy need to provide so that you grow revenue and not just GitHub stars? My take: find your OS audience's real and biggest pain point, then focus, focus, focus on building something experience-changing as your managed service.

On the other hand, we will continue to see, and may see more of, open-source projects incubated by big companies and foundations. This is common in software development; just look at the OS projects Facebook maintains and supports, like React. We can see early signs of this in Confluent's move to acquire Immerok, one of the main maintainers behind Apache Flink. This space is definitely something I will investigate further, since it is the most solid model for OS projects.

Rudderstack's approach is still an interesting blueprint if you want to shake up an established category: use OS as a clear GTM strategy and then, at some point, treat it as a contribution.

In a nutshell, I think all companies following dbt's approach need to double down on their GTM strategy. Step 1: collect a ton of feedback and insights from paying customers, not from engaged OS users. Then isolate the biggest problems and your hypothetical solutions for them, and do product work (build, measure, learn). But don't wait too long to work on your GTM strategy. Look for early revenue learnings.

Interesting, Timo. I agree with you on dbt in the sense that the product didn't have a clear direction. It was caught between the demand for better UX in the cloud app, where they were at a huge disadvantage, and the pull towards metrics, with no clear value capture mechanism in that direction. I'm less familiar with the other products, so I found your analysis insightful.

Another one for your list is PostHog. At the start, they had an open-source "demo" version with certain features paywalled; they've since sunset a few and are now open source on a constrained version of the product.

https://posthog.com/questions/open-source-vs-paid

I found your dbt & Rudderstack observations interesting.

It's not uncommon for startups to go after markets that are not yet mature, because once they are, it takes big budgets to compete. Think how many backlinks the most frequent terms have. If you anticipate well, you can build them long before the traffic is there. So I think it's pretty standard for a go-to-market strategy to focus on a single growth pillar initially, be that acquisition, retention, or monetisation. And you may be right about dbt's approach. It looks like they followed Tim Ferriss's blueprint for how to build a movement. Step 1: Create a phenomenon (they did this with a new tool). Step 2: Create a community (they did this with champion enablement). Step 3: Monetise. That is where you are pointing out that they're struggling.

I don't have my go-to monetisation diagnosis dashboards with their data in front of me, so I can't comment on their specific challenges. But based on your observations, you're saying that their monetisation is broken because the 'what' we charge for isn't enough and therefore adds too much friction. It's possible. I would not call this "they have no clear go-to-market strategy," though. I'd say they hit monetisation challenges. When cash is pouring in, this is less of an imminent growth blocker. Think Facebook. But if the macro environment changes and the need for self-sustaining growth loops puts more emphasis on getting monetisation right, then it's a challenge to be solved. It is a difficult one, and one that's best viewed as an iterative state until the business finds model/market fit. Reforge have a mountain of material on this, but I agree with you that unless you have deep pockets, monetisation can break a growth loop. The data space is notorious for needing 'market education' investments to take a target prospect and nudge them to 'in-market'. This is a high-CAC channel, because we're talking about things like enterprise technical content, CSMs, enterprise sales, etc. So if the business does not earn enough ARPA, or does not earn it back fast enough, what follows is slow growth, creating more opportunities for others to spot gaps and fill them.

Re Rudderstack: I just remember that differently. But maybe that's a timing thing, me coming into it later or earlier and therefore a different meme sticking. I remember Segment was born out of open source. And Rudderstack was quick to recognise a few category entry points and forked Segment's repo before building differentiation. They saw an opportunity to position against Segment on cost leadership. In doing so, they took a different stance on CDPs, putting your data warehouse at the center. That was either great vision or luck, because it does turn out like the meme stack and is gaining popularity. That's what composable CDPs are: data warehouse first, i.e., a data warehouse CDP. And for that, Rudderstack were first. If they leaned into that more, I'd bet they'd compete against Segment's mind share more effectively. I tweaked their home page last night when thinking about how this could be done haha https://www.linkedin.com/posts/rhys-fisher-growth-mentor_cherches-le-creneau-fill-the-gap-french-activity-7104560222995656705-SXMP